Autonomous Pelican quadcopter with Computer Vision

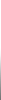

The Pelican is a quadrotor manufactured by Ascending Technologies. It is made of a flexible carbon fiber structure full of spaces and holes to attach whatever you want, which makes it perfect for experimenting with different sensors. As you know, situational awareness is now the main handicap for robots, and adding extra sensors will help you take over the world. But if you have many sensors, you will need some computing power to process all the data in time, and the Pelican has pretty decent brains for that, too. It ships with an AscTec Atomboard with a 1.6 GHz Intel Atom processor, 1 GB of DDR2 RAM, pre-installed Ubuntu Linux and plenty of USB ports for... yes, sensors! The Pelican you see in the picture is the one that we used in the Computer Vision Group (CVG) to win the special prize for the "Best Automatic Performance" in the IMAV 2012 competition. But, hey, if you don't want to keep reading, watch a video instead.

Pelican quadcopter manufactured by Ascending Technologies and modified by the CVG.

MAVwork and the first generic proxy for Linux

MAVwork is a framework that enables applications to interact with multirotors through a common API. Applications do not need to know what kind of drone is at the other end of the communication link or how to talk to it: an application just opens a drone instance and starts playing with a common model, and everything else is transparent. Every drone is mapped onto that common model by a proxy that deals with the drone hardware. To add support for a specific drone in MAVwork, such a proxy must be implemented, and this is what I did for the Pelican.
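
To make the idea concrete, here is a minimal Python sketch of an application written against a common drone model. All names (`DroneState`, `DroneProxy`, `FakeProxy`, `brake_forward_motion`) and the command signature are hypothetical illustrations, not MAVwork's actual API; the point is only that the application code never touches drone-specific details.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class DroneState:
    """Hypothetical common model shared by every drone type."""
    altitude_m: float
    yaw_rad: float
    vx_mps: float  # forward velocity
    vy_mps: float  # lateral velocity


class DroneProxy(ABC):
    """Hypothetical proxy interface: each drone type gets one implementation
    that translates its own protocol into the common model."""

    @abstractmethod
    def state(self) -> DroneState: ...

    @abstractmethod
    def command(self, pitch: float, roll: float, yaw_rate: float, climb_rate: float) -> None: ...


class FakeProxy(DroneProxy):
    """Stand-in proxy that reports a fixed state and records commands."""

    def __init__(self) -> None:
        self.last_command = None

    def state(self) -> DroneState:
        return DroneState(altitude_m=1.0, yaw_rad=0.0, vx_mps=0.2, vy_mps=0.0)

    def command(self, pitch, roll, yaw_rate, climb_rate):
        self.last_command = (pitch, roll, yaw_rate, climb_rate)


def brake_forward_motion(drone: DroneProxy, gain: float = 0.5) -> None:
    """Application logic written only against the common model.
    Assumed sign convention: positive pitch/roll accelerate towards +vx/+vy."""
    s = drone.state()
    drone.command(pitch=-gain * s.vx_mps, roll=-gain * s.vy_mps, yaw_rate=0.0, climb_rate=0.0)


drone = FakeProxy()
brake_forward_motion(drone)
```

Swapping `FakeProxy` for a proxy of a real drone would leave `brake_forward_motion` untouched, which is the property the common model is meant to provide.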

The Pelican proxy was the second one that I programmed for MAVwork, after the AR.Drone proxy, but it was the first one to run on the drone's onboard embedded system, because the AR.Drone proxy handles the drone remotely through the manufacturer's SDK. This meant more work for the Pelican than for the AR.Drone, since the proxy had to handle a lot of things that Parrot's SDK managed transparently. For the sake of efficiency, I made the Pelican proxy as modular as possible, so that creating new proxies for other Linux-based drones could benefit from this project and be released sooner. My intention was to clearly separate what is common to every drone (the software architecture, communications, standard cameras and internal controllers) from what is usually unique (interfacing with custom autopilots and sensors). Now, in most cases, a developer only needs to provide custom modules to interface with the autopilot or to read a sensor that is not yet supported.
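
The split between common and drone-specific parts can be pictured as composition: a generic proxy owns the shared machinery and delegates only to small plug-in modules. The sketch below is a hypothetical Python illustration (`AutopilotModule`, `SensorModule`, `GenericProxy` and the dummy modules are made-up names), not the structure of the actual MAVwork code.

```python
from typing import Dict, List, Protocol


class AutopilotModule(Protocol):
    """Drone-specific: talks to one particular autopilot."""

    def read_attitude(self) -> Dict[str, float]: ...

    def write_command(self, cmd: Dict[str, float]) -> None: ...


class SensorModule(Protocol):
    """Drone-specific: reads a sensor the generic proxy does not support yet."""

    name: str

    def read(self) -> float: ...


class GenericProxy:
    """Common to every Linux-based drone. In a real proxy this is where the
    software architecture, communications, standard cameras and internal
    controllers would live; here it only merges readings and forwards commands."""

    def __init__(self, autopilot: AutopilotModule, sensors: List[SensorModule]) -> None:
        self.autopilot = autopilot
        self.sensors = sensors

    def poll(self) -> Dict[str, float]:
        state = dict(self.autopilot.read_attitude())
        for sensor in self.sensors:
            state[sensor.name] = sensor.read()
        return state

    def send(self, cmd: Dict[str, float]) -> None:
        self.autopilot.write_command(cmd)


# Supporting a new drone means writing only small modules like these (dummies here).
class DummyAutopilot:
    def __init__(self) -> None:
        self.sent: List[Dict[str, float]] = []

    def read_attitude(self) -> Dict[str, float]:
        return {"roll": 0.0, "pitch": 0.02, "yaw": 1.57}

    def write_command(self, cmd: Dict[str, float]) -> None:
        self.sent.append(cmd)


class DummyUltrasound:
    name = "altitude_m"

    def read(self) -> float:
        return 0.85


proxy = GenericProxy(DummyAutopilot(), [DummyUltrasound()])
state = proxy.poll()
```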

Hardware modifications

MAVwork has automatic internal modes to make developers' lives a bit easier. These modes correspond to the (usually) most safety-critical actions: take-off, landing and hover. During take-off and landing, the proximity of the propellers to the ground poses some obvious dangers. Hover, on the other hand, is very useful as a fail-safe mode that can be enabled during a flight if things start to go wrong with the control app under test.
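
One way to picture these internal modes is as a small state machine that the proxy enforces, so that an application can only request safe transitions and can always fall back to hover. The states, the transition table and the event names below are a hypothetical Python sketch, not MAVwork's actual mode logic.

```python
from enum import Enum, auto


class Mode(Enum):
    LANDED = auto()
    TAKING_OFF = auto()
    HOVERING = auto()     # fail-safe: internal hover controller in charge
    APP_CONTROL = auto()  # the control application under test is flying
    LANDING = auto()


# Transitions an application may request (hypothetical table).
ALLOWED = {
    Mode.LANDED: {Mode.TAKING_OFF},
    Mode.TAKING_OFF: {Mode.LANDING},               # abort take-off
    Mode.HOVERING: {Mode.APP_CONTROL, Mode.LANDING},
    Mode.APP_CONTROL: {Mode.HOVERING, Mode.LANDING},
    Mode.LANDING: {Mode.HOVERING},                 # go around
}


class ModeManager:
    def __init__(self) -> None:
        self.mode = Mode.LANDED

    def request(self, target: Mode) -> bool:
        """Switch to `target` if the transition is allowed; return success."""
        if target in ALLOWED[self.mode]:
            self.mode = target
            return True
        return False

    def on_takeoff_complete(self) -> None:
        # Internal event: an automatic take-off always ends in hover.
        if self.mode is Mode.TAKING_OFF:
            self.mode = Mode.HOVERING

    def on_touchdown(self) -> None:
        # Internal event: landing finished.
        if self.mode is Mode.LANDING:
            self.mode = Mode.LANDED
```

With this design the control app never handles the ground-proximity phases itself: it only gets control after the automatic take-off has left the drone hovering.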

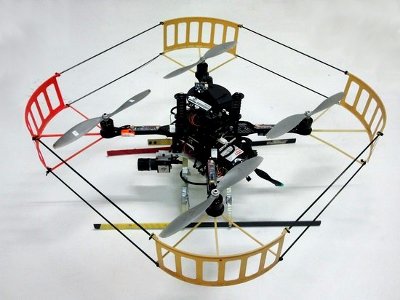

For the internal modes to work, a MAVwork proxy implements altitude and hover controllers that need altitude and velocity estimates as inputs. Most micro aerial vehicles, like the Pelican, estimate altitude with a barometer, but its measurements are neither accurate nor precise enough to safely take off, land or even hover at low altitude. This is why we installed an ultrasound sensor to get better altitude estimates. For velocity, an extra downward-facing camera was attached under the drone and a visual velocity estimation module was implemented in the generic Linux proxy. This module is hardware-agnostic, so other quadrotors without native velocity measurement will be able to use it in the future. Finally, a front camera was also added for multiple purposes. On request of the control apps, MAVwork can stream the front camera video back to them (and the same can be done with any other connected camera). This opens the door to applications doing monocular visual SLAM, mosaicing, human detection, face recognition and more; it is up to developers' imagination.
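
To illustrate how those estimates feed the internal controllers, here is a hypothetical Python sketch of a hover controller: a PI loop drives the camera-based velocity estimate to zero by commanding tilt, and a proportional loop holds the ultrasound altitude at a reference. The gains, limits, sign convention and the `HoverController` name are assumptions, not the values or structure used in the MAVwork proxy.

```python
from dataclasses import dataclass


def clamp(x: float, limit: float) -> float:
    """Saturate x to [-limit, limit]."""
    return max(-limit, min(limit, x))


@dataclass
class HoverController:
    """Hypothetical hover/altitude controller. Assumed sign convention:
    positive tilt_forward accelerates forward, positive tilt_left accelerates
    left, positive climb_rate goes up."""
    kp_vel: float = 0.3     # rad per (m/s)
    ki_vel: float = 0.05    # rad per m
    kp_alt: float = 0.8     # (m/s) per m
    max_tilt: float = 0.2   # rad
    max_climb: float = 0.5  # m/s
    _ix: float = 0.0        # integral of forward velocity error
    _iy: float = 0.0        # integral of lateral velocity error

    def update(self, vx, vy, altitude, altitude_ref, dt):
        # Velocity estimates come from the bottom-camera module; the reference is zero.
        self._ix += -vx * dt
        self._iy += -vy * dt
        tilt_forward = clamp(self.kp_vel * -vx + self.ki_vel * self._ix, self.max_tilt)
        tilt_left = clamp(self.kp_vel * -vy + self.ki_vel * self._iy, self.max_tilt)
        # Altitude comes from the ultrasound sensor.
        climb_rate = clamp(self.kp_alt * (altitude_ref - altitude), self.max_climb)
        return tilt_forward, tilt_left, climb_rate
```

The saturation limits matter near the ground: they keep a noisy velocity or altitude estimate from turning into an aggressive attitude or climb command.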

Bottom view of the modified Pelican. On the central line, left to right: ultrasound sensor, bottom and front cameras.

Computer Vision for velocity estimation

Ground images from the bottom camera are fed to the velocity estimation module. First, features are selected with the Shi-Tomasi corner detector (cvGoodFeaturesToTrack), and their optical flow between consecutive frames is computed with OpenCV's implementation of the pyramidal Lucas-Kanade algorithm (cvCalcOpticalFlowPyrLK). I had already seen this solution in a few videos on the Internet. However, it suffers from problems inherent to the assumption that the drone is always parallel to the ground. Not only can it give bad estimates at high pitch or roll angles, it can even make the drone unstable. Imagine that you have a speed-controlled drone hovering, and you set a reference of 1 m/s forward. In the first milliseconds, the controller will make the drone pitch forward and, as the camera rotates with the drone, it will see the ground moving forward. Therefore, the estimator will think that the drone is moving backwards and (here comes the disastrous part) the controller will command an even bigger forward pitch. Yes, as that sudden voice from a distant corner of your brain just shouted, that is positive feedback, and we all know what positive feedback means for controller stability.
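
A quick back-of-the-envelope check shows how large the effect is. The Python sketch below assumes a pinhole camera with a hypothetical 300-pixel focal length, 1 m altitude, and a 5° nose-down pitch over 0.1 s with no translation at all; the function names are made up for this illustration.

```python
import math


def pitch_induced_shift_px(pitch_rad, focal_px):
    """Image shift (towards the nose) of the feature that sat at the image centre
    when the drone pitches nose-down by `pitch_rad` without translating.
    Pure camera rotation, pinhole model: shift = f * tan(angle)."""
    return focal_px * math.tan(pitch_rad)


def level_camera_velocity(shift_px, focal_px, height_m, dt_s):
    """What an estimator that assumes a level camera reports: ground features
    moving towards the nose by `shift_px` are read as the drone moving
    backwards by (shift / focal) * height metres during dt_s seconds."""
    return -(shift_px / focal_px) * height_m / dt_s


focal = 300.0  # hypothetical focal length in pixels
shift = pitch_induced_shift_px(math.radians(5.0), focal)
v_est = level_camera_velocity(shift, focal, height_m=1.0, dt_s=0.1)
# v_est is about -0.87 m/s, although the drone has not moved at all.
```

With a +1 m/s reference, the controller would then see an error of about 1.87 m/s instead of 1 m/s and pitch even further forward, which is the positive feedback loop described above.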

So, the big deal here is taking the drone attitude into account to track the real position of the ground features, not just their projections on the image plane. What I did was model the camera with respect to a flat ground, using the attitude readings from the Inertial Measurement Unit (IMU), and then project the features back onto the floor with a pinhole camera model. In this way, for every pair of consecutive frames you get the feature positions on the floor (according to the model), and the velocity follows directly from their displacement divided by the time between frames. The last step is filtering the noise. In some frame pairs there may be bad feature matches, which result in huge displacements. My solution was to apply a RANSAC algorithm to discard the outliers among the population of displacement vectors.
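
The back-projection and outlier rejection can be sketched in Python as follows. This is not the author's implementation: the frame conventions, the camera mounting matrix `R_BODY_CAM`, the intrinsics tuple `(fx, fy, cx, cy)`, the function names and the RANSAC parameters are all assumptions. Each tracked pixel is turned into a ray, rotated by the IMU attitude and intersected with the floor at the measured height. Since the drone-relative floor position of a static feature shifts by minus the drone's own displacement, the consensus displacement divided by the frame interval gives the velocity. The RANSAC model here is the simplest possible one: a single 2-D translation hypothesised from one sample.

```python
import math
import random

# Assumed conventions (hypothetical, for illustration only):
#  * world and body frames are x forward, y left, z up; the floor is the plane z = 0
#  * attitude = (roll, pitch, yaw) in radians, body-to-world R = Rz(yaw) Ry(pitch) Rx(roll);
#    with these axes a positive pitch tilts the nose down
#  * camera frame is the usual pinhole one (z = optical axis, x = image right, y = image down),
#    looking straight down with the top of the image towards the nose
R_BODY_CAM = [[0.0, -1.0, 0.0], [-1.0, 0.0, 0.0], [0.0, 0.0, -1.0]]


def rot_zyx(roll, pitch, yaw):
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cw, sw = math.cos(yaw), math.sin(yaw)
    return [[cw * cp, cw * sp * sr - sw * cr, cw * sp * cr + sw * sr],
            [sw * cp, sw * sp * sr + cw * cr, sw * sp * cr - cw * sr],
            [-sp, cp * sr, cp * cr]]


def matvec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def pixel_to_ground(u, v, attitude, height, cam):
    """Back-project pixel (u, v) onto the flat floor. Returns the feature's (x, y)
    position relative to the point right below the camera, in world-aligned axes."""
    fx, fy, cx, cy = cam
    ray = matvec(rot_zyx(*attitude), matvec(R_BODY_CAM, [(u - cx) / fx, (v - cy) / fy, 1.0]))
    if ray[2] >= 0.0:
        raise ValueError("viewing ray does not hit the ground")
    t = height / -ray[2]  # scale so the ray drops exactly `height` metres
    return t * ray[0], t * ray[1]


def ground_to_pixel(x, y, attitude, height, cam):
    """Inverse of pixel_to_ground; handy for synthesising test data."""
    fx, fy, cx, cy = cam
    d = matvec(transpose(R_BODY_CAM), matvec(transpose(rot_zyx(*attitude)), [x, y, -height]))
    return cx + fx * d[0] / d[2], cy + fy * d[1] / d[2]


def ransac_translation(disps, threshold, iterations=50, seed=0):
    """Pick one displacement as the hypothesis, collect those within `threshold`
    metres of it, keep the largest consensus set and return its mean."""
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        hx, hy = rng.choice(disps)
        inliers = [(dx, dy) for dx, dy in disps if math.hypot(dx - hx, dy - hy) <= threshold]
        if len(inliers) > len(best):
            best = inliers
    return (sum(d[0] for d in best) / len(best), sum(d[1] for d in best) / len(best))


def estimate_velocity(px_prev, px_curr, att_prev, att_curr, h_prev, h_curr, dt, cam, threshold=0.05):
    disps = []
    for (u0, v0), (u1, v1) in zip(px_prev, px_curr):
        x0, y0 = pixel_to_ground(u0, v0, att_prev, h_prev, cam)
        x1, y1 = pixel_to_ground(u1, v1, att_curr, h_curr, cam)
        # A static floor point moves opposite to the drone in the drone-centred frame.
        disps.append((x0 - x1, y0 - y1))
    dx, dy = ransac_translation(disps, threshold)
    return dx / dt, dy / dt
```

In a synthetic check with these conventions, a pure 0.1 rad pitch change with no translation yields a velocity of essentially zero, which is exactly the case where a level-camera estimator goes wrong. Note that `height` must be the vertical height above the floor; an ultrasound sensor fixed to the frame measures along the tilted body axis, so its reading needs the same attitude correction.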

The algorithm explained above is the basic one, and it can be quite time-consuming for large images with many features per image. Higher-resolution images are needed when flying over finer-grained terrain or at higher altitudes, so further improvements were later added to make it work on more constrained computing platforms. In summary, it is an adaptive version of the algorithm that adjusts the field of view with altitude, which strikes a trade-off between resolution and computing load.
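
The exact adaptive rule is not described here, so the following is only one plausible policy, written as a hypothetical Python sketch: keep a fixed pixel budget for the tracker and choose a centred crop (the effective field of view) plus a downsampling factor so that each processed pixel covers roughly the same patch of ground. At low altitude the whole frame is downsampled; as altitude grows, the crop shrinks towards native resolution. `adaptive_roi`, `budget_px` and `target_gsd_m` are made-up names.

```python
import math


def adaptive_roi(altitude_m, image_width_px, hfov_rad, budget_px, target_gsd_m):
    """Hypothetical policy: return (crop_width_px, downsample_factor) for a centred
    crop that, resized to `budget_px` pixels wide, gives about `target_gsd_m`
    metres of ground per processed pixel."""
    # Ground width covered by the full frame, for a downward-looking pinhole camera.
    ground_width_m = 2.0 * altitude_m * math.tan(hfov_rad / 2.0)
    native_gsd_m = ground_width_m / image_width_px  # metres per native pixel
    # Crop whose ground footprint equals budget_px * target_gsd_m.
    crop_px = budget_px * target_gsd_m / native_gsd_m
    # Never upsample (crop narrower than the budget) and never exceed the frame.
    crop_px = max(budget_px, min(image_width_px, crop_px))
    return crop_px, crop_px / budget_px


for h in (0.5, 1.5, 3.0):
    crop, factor = adaptive_roi(h, 640, math.radians(60.0), 160, 0.005)
    print(f"altitude {h:.1f} m -> crop {crop:.0f} px, downsample x{factor:.2f}")
```

The processing cost stays bounded by the `budget_px` width at every altitude, while the crop shrinks at higher altitudes, where apparent ground motion in pixels is smaller anyway. The same fraction would be applied to the image height.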

A MAVwork-based open multirotor controller

Jesús Pestana, also a member of the CVG, wrote a great open-source multirotor controller that runs on top of MAVwork. It has a state estimator based on an Extended Kalman Filter, a customizable multirotor model, and velocity, position and trajectory control modes. The link to the source code is under this article, in the "Source code" section. In the following video, the trajectory controller relies only on the attitude, altitude and linear velocity measurements from MAVwork to estimate the full state, including the drone's position.
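
As a much simpler illustration of how position can be inferred from velocity measurements alone, here is a one-axis linear Kalman filter in Python. It is not Jesús Pestana's EKF, which uses a full multirotor model; the class name, the state layout `[position, velocity]`, the white-noise-acceleration process model and the noise values `q` and `r` are assumptions for this sketch. Nothing ever measures position directly, so the position estimate is essentially integrated velocity, and its uncertainty is only reduced through its correlation with velocity.

```python
class VelocityOnlyKF:
    """Hypothetical 1-D linear Kalman filter, state [position, velocity],
    measurement = velocity only (H = [0, 1])."""

    def __init__(self, q: float = 1.0, r: float = 0.01) -> None:
        self.x = [0.0, 0.0]                 # [position (m), velocity (m/s)]
        self.P = [[1.0, 0.0], [0.0, 1.0]]   # state covariance
        self.q, self.r = q, r               # process / measurement noise

    def predict(self, dt: float) -> None:
        pos, vel = self.x
        self.x = [pos + vel * dt, vel]
        (pxx, pxv), (_, pvv) = self.P
        # P = F P F^T + Q with F = [[1, dt], [0, 1]], white-noise-acceleration Q.
        nxx = pxx + 2.0 * dt * pxv + dt * dt * pvv + self.q * dt ** 4 / 4.0
        nxv = pxv + dt * pvv + self.q * dt ** 3 / 2.0
        nvv = pvv + self.q * dt ** 2
        self.P = [[nxx, nxv], [nxv, nvv]]

    def update_velocity(self, z: float) -> None:
        (pxx, pxv), (_, pvv) = self.P
        s = pvv + self.r                  # innovation variance
        kx, kv = pxv / s, pvv / s         # Kalman gain
        innov = z - self.x[1]
        self.x = [self.x[0] + kx * innov, self.x[1] + kv * innov]
        # P = (I - K H) P
        self.P = [[pxx - kx * pxv, pxv - kx * pvv],
                  [pxv - kv * pxv, pvv - kv * pvv]]


# Simulated flight at a constant 0.5 m/s for 2 s, velocity measured at 10 Hz.
kf = VelocityOnlyKF()
for _ in range(20):
    kf.predict(0.1)
    kf.update_velocity(0.5)
```

After the loop the position estimate is close to the true 1 m travelled, even though no position was ever measured; with real, noisy velocity measurements the position error would slowly drift.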

The video above was presented together with a paper at the 2013 International Conference on Unmanned Aircraft Systems (ICUAS'13). The augmented reality trajectories were overlaid with my own video editing tool, which is based on camera localization algorithms from my master's thesis.